In the world of deep learning and machine learning, the ability to leverage a Graphics Processing Unit (GPU) can significantly enhance performance and reduce training time for complex models. PyTorch, a popular open-source machine learning library, provides robust support for GPU acceleration.

Confirming that PyTorch is actually running on your GPU, however, is not always straightforward. This guide walks through how to verify that PyTorch is using the GPU, how to monitor GPU usage during training, and how to troubleshoot the most common problems.

Understanding GPU and Its Importance in PyTorch

Before diving into the methods for checking GPU usage, it’s essential to understand why GPUs are critical for training machine learning models. Unlike Central Processing Units (CPUs), which are optimized for sequential tasks, GPUs excel at performing multiple calculations simultaneously. This parallel processing capability makes GPUs particularly well-suited for handling the matrix operations that are foundational in deep learning.

In PyTorch, utilizing a GPU can lead to substantial speedups in model training and inference, allowing researchers and developers to experiment with larger datasets and more complex architectures.

Setting Up PyTorch for GPU Usage:

To use a GPU with PyTorch, you first need to ensure that your environment is correctly set up. Here’s a brief checklist:

- Install PyTorch with GPU Support: Make sure you have installed the correct version of PyTorch that supports GPU. You can find installation instructions on the official PyTorch website.

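- Check CUDA Installation: CUDA is a parallel computing platform and programming model created by NVIDIA. PyTorch's prebuilt GPU packages bundle the CUDA runtime they were built against, so a compatible NVIDIA driver is usually all they need; a separately installed CUDA toolkit matters mainly if you build PyTorch or custom extensions from source. If the toolkit is installed, you can check its version by running: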

nvcc --version

- Install the Appropriate Drivers: Ensure that your NVIDIA drivers are up to date. This is crucial for optimal GPU performance.
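The checklist above can also be confirmed from Python. The following is a minimal sketch, not an official diagnostic: the helper name `describe_environment` is made up for illustration, and it relies only on `torch.__version__`, `torch.version.cuda`, and `torch.cuda.is_available()`.

```python
import importlib.util


def describe_environment():
    """Collect the facts that decide whether PyTorch can reach a GPU."""
    # Hypothetical helper name; it only reads attributes that PyTorch exposes.
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False}

    import torch

    return {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # None means this build was compiled without CUDA (for example a CPU-only build).
        "built_with_cuda": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
    }


info = describe_environment()
for key, value in info.items():
    print(f"{key}: {value}")
```

If `built_with_cuda` is `None`, no driver update will help; the fix is to install a GPU build of PyTorch.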


How to Check if PyTorch is Using GPU?

Now that your environment is set up, let’s explore how to check if PyTorch is utilizing the GPU effectively.

1. Checking CUDA Availability in PyTorch

The first step is to check if PyTorch can detect your GPU. You can do this by running the following command in your Python environment:

import torch

print(torch.cuda.is_available())

This command returns True if CUDA is available and PyTorch can use the GPU; otherwise, it returns False.

2. Identifying the Current Device

Once you’ve confirmed that CUDA is available, you can check which device PyTorch is currently using. Use the following commands:

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(device)

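This code prints cuda if a GPU is available and cpu otherwise. Note that creating the device object does not move anything by itself: tensors and models stay on the CPU until you explicitly place them on the device.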
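Availability alone does not prove that your work runs on the GPU, so it can help to inspect where a model and its tensors actually live. The sketch below uses a tiny `nn.Linear` layer and random data purely as stand-ins for a real model and batch.

```python
import torch
import torch.nn as nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Tensors and modules are created on the CPU unless told otherwise.
model = nn.Linear(4, 2).to(device)    # moves the module's parameters to the device
batch = torch.randn(8, 4).to(device)  # returns a tensor on the target device

output = model(batch)

# Each of these reports where the data actually lives.
print("parameters:", next(model.parameters()).device)
print("input:     ", batch.device)
print("output:    ", output.device)
print("on GPU:    ", output.is_cuda)
```

If the parameters report `cuda:0` but an input reports `cpu`, the forward pass raises a device mismatch error, which is a common sign that a `.to(device)` call is missing.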

3. Checking GPU Device Count

If you have multiple GPUs, you might want to check how many GPUs are available. You can do this with:

print(torch.cuda.device_count())

This command returns the number of GPUs that PyTorch can access.

4. Getting the Name of the GPU

To check the specific GPU being used, you can retrieve its name with the following command:

if torch.cuda.is_available():
    print(torch.cuda.get_device_name(0))

This will display the name of the first GPU detected by PyTorch.
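On a machine with several GPUs, the count and name checks can be combined into one listing. This is a rough sketch: `list_gpus` is an illustrative helper name, and the fields it reports come from `torch.cuda.get_device_properties`.

```python
import torch


def list_gpus():
    """Return one summary dict per CUDA device visible to PyTorch."""
    gpus = []
    for index in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(index)
        gpus.append({
            "index": index,
            "name": props.name,
            "total_memory_gib": round(props.total_memory / 1024**3, 2),
            "compute_capability": f"{props.major}.{props.minor}",
        })
    return gpus


gpus = list_gpus()
if not gpus:
    print("No CUDA devices visible to PyTorch")
for gpu in gpus:
    print(gpu)
if gpus:
    # Index of the device that new CUDA tensors go to by default.
    print("current device index:", torch.cuda.current_device())
```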

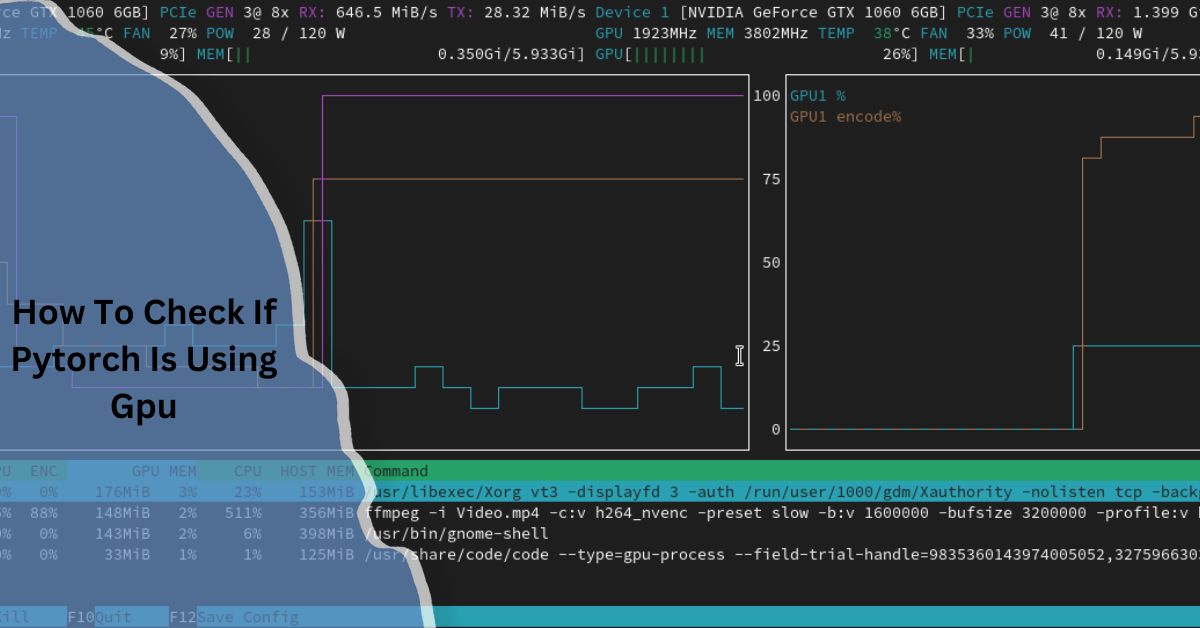

5. Monitoring GPU Usage

While the above commands help check if PyTorch is set up to use a GPU, monitoring GPU usage during model training can provide further insights. You can use the following tools:

- NVIDIA SMI: The NVIDIA System Management Interface (SMI) is a command-line utility that provides detailed information about your GPU, including memory usage, temperature, and running processes. You can run it in your terminal:

nvidia-smi

- PyTorch’s built-in functions: PyTorch provides functions to monitor GPU memory usage, such as:

print(torch.cuda.memory_allocated())

print(torch.cuda.memory_reserved())

These functions give you information about the currently allocated and reserved GPU memory, allowing you to gauge your model’s memory consumption.
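If you would rather read the nvidia-smi figures from a script than from the terminal, one possible approach is to call the tool with its CSV query flags and parse the result. The helper names `parse_smi_csv` and `query_gpus` are hypothetical, and the sketch assumes GPU names contain no commas.

```python
import shutil
import subprocess

QUERY_FIELDS = ["name", "memory.used", "memory.total", "utilization.gpu"]


def parse_smi_csv(text):
    """Turn nvidia-smi CSV rows into dicts keyed by the queried field names."""
    rows = []
    for line in text.strip().splitlines():
        values = [value.strip() for value in line.split(",")]
        if len(values) == len(QUERY_FIELDS):
            rows.append(dict(zip(QUERY_FIELDS, values)))
    return rows


def query_gpus():
    """Run nvidia-smi once; return [] if the tool is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi",
         f"--query-gpu={','.join(QUERY_FIELDS)}",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True,
    )
    return parse_smi_csv(result.stdout) if result.returncode == 0 else []


for row in query_gpus():
    print(row)
```

With `nounits`, memory values are reported in MiB and utilization in percent. For a live view in the terminal, `nvidia-smi -l 1` refreshes the full report every second.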

Best Practices for Using GPU with PyTorch:

To maximize your experience with PyTorch on GPU, consider the following best practices:

Optimize Data Loading

When training models, the speed at which data is loaded can become a bottleneck. Use PyTorch’s DataLoader with multiple workers to load data in parallel, minimizing the time the GPU sits idle.
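As a rough illustration, a loader with worker processes and pinned memory might look like the sketch below. The dataset is synthetic, `make_loader` is an illustrative name, and the worker count of 2 is an arbitrary starting point that should be tuned to your CPU and storage.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def make_loader(num_workers=2, batch_size=32):
    # Synthetic stand-in data: 256 samples with 10 features each.
    dataset = TensorDataset(torch.randn(256, 10), torch.randint(0, 2, (256,)))
    return DataLoader(
        dataset,
        batch_size=batch_size,
        shuffle=True,
        num_workers=num_workers,               # worker processes that prepare batches
        pin_memory=torch.cuda.is_available(),  # page-locked memory speeds up host-to-GPU copies
    )


if __name__ == "__main__":  # needed for worker processes on spawn-based platforms
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    for features, labels in make_loader():
        # non_blocking lets the copy overlap with compute when memory is pinned.
        features = features.to(device, non_blocking=True)
        labels = labels.to(device, non_blocking=True)
        break
    print(features.shape, features.device)
```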

Use Mixed Precision Training

Mixed precision training uses both 16-bit and 32-bit floating-point numbers during training, which can speed up computation and reduce memory usage. You can use the torch.cuda.amp module for this purpose; recent PyTorch releases expose the same functionality through torch.amp.
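A single mixed precision training step could look roughly like this. The model, data, and learning rate are placeholders, and the sketch only enables mixed precision when a GPU is present. It uses `torch.cuda.amp.GradScaler` to match the module named above; newer releases may emit a deprecation warning pointing to `torch.amp.GradScaler`.

```python
import torch
import torch.nn as nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
use_amp = device.type == "cuda"  # assumption: only use mixed precision on a GPU
amp_dtype = torch.float16 if use_amp else torch.bfloat16

model = nn.Linear(16, 4).to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
loss_fn = nn.CrossEntropyLoss()
# GradScaler rescales the loss so small float16 gradients do not underflow.
scaler = torch.cuda.amp.GradScaler(enabled=use_amp)

inputs = torch.randn(32, 16, device=device)
targets = torch.randint(0, 4, (32,), device=device)

optimizer.zero_grad()
# Inside autocast, eligible ops run in 16-bit while the rest stay in float32.
with torch.autocast(device_type=device.type, dtype=amp_dtype, enabled=use_amp):
    loss = loss_fn(model(inputs), targets)

scaler.scale(loss).backward()  # backward pass on the scaled loss
scaler.step(optimizer)         # unscales gradients, then calls optimizer.step()
scaler.update()                # adjusts the scale factor for the next iteration
print("loss:", loss.item())
```

When `enabled` is false, both the scaler and the autocast context become no-ops, so the same code path runs unchanged on a CPU.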

Monitor GPU Utilization

Regularly check your GPU utilization using tools like nvidia-smi. This allows you to identify bottlenecks and ensure that your GPU resources are being fully utilized during training.

Avoid Memory Leaks

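When using a GPU, watch for memory that stays allocated because tensors are still referenced, for example losses or outputs accumulated in a list across iterations. torch.cuda.empty_cache() returns cached but unused memory to the driver; it does not free memory held by live tensors, and it does not increase the amount of memory available to PyTorch itself.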
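To see the difference between allocated and cached memory, you can take snapshots around an allocation. This is a small sketch with an illustrative helper name, `memory_snapshot`; the 256 MiB tensor is an arbitrary size chosen to make the change visible.

```python
import torch


def memory_snapshot(label):
    """Print and return (allocated, reserved) bytes on the current CUDA device."""
    allocated = torch.cuda.memory_allocated()
    reserved = torch.cuda.memory_reserved()
    print(f"{label:<20} allocated={allocated:>12,d}  reserved={reserved:>12,d}")
    return allocated, reserved


if torch.cuda.is_available():
    memory_snapshot("start")
    big = torch.empty(64, 1024, 1024, device="cuda")  # 64 Mi float32 values = 256 MiB
    memory_snapshot("after allocation")
    del big                   # drops the last reference; the block goes back to PyTorch's cache
    memory_snapshot("after del")
    torch.cuda.empty_cache()  # hands cached, unused blocks back to the driver
    memory_snapshot("after empty_cache")
else:
    print("CUDA not available; nothing to measure")
```

Typically the allocated figure drops after `del`, while the reserved figure only drops after `empty_cache()`.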


Troubleshooting Common Issues

While working with PyTorch on GPU, you might encounter some common issues. Here are a few troubleshooting tips:

- CUDA Error: Out of Memory: This error occurs when your model requires more GPU memory than is available. To resolve this, you can try reducing the batch size or simplifying your model architecture.

- CUDA is Not Available: If torch.cuda.is_available() returns False, first confirm that you installed a GPU build of PyTorch (torch.version.cuda is None on a CPU-only build), then check that your NVIDIA drivers are up to date. You may also need to reinstall PyTorch with the appropriate CUDA version.

- Slow Performance: If you notice that your GPU is not being utilized effectively (as seen in nvidia-smi), consider profiling your code to identify bottlenecks. Tools like PyTorch’s built-in profiler or TensorBoard can help you analyze performance.
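For the out-of-memory case, one common pattern is to retry with a smaller batch. The sketch below is only an illustration: `run_with_backoff` and `fake_step` are hypothetical names, and `fake_step` simulates the error so the example runs without a GPU. It relies on PyTorch reporting CUDA out-of-memory conditions as a `RuntimeError` whose message contains "out of memory".

```python
def run_with_backoff(step, batch_size, min_batch_size=1):
    """Call step(batch_size), halving the batch size after each out-of-memory error."""
    while batch_size >= min_batch_size:
        try:
            return batch_size, step(batch_size)
        except RuntimeError as error:
            # Re-raise anything that is not an out-of-memory error.
            if "out of memory" not in str(error).lower():
                raise
            print(f"batch size {batch_size} did not fit, retrying with {batch_size // 2}")
            batch_size //= 2
    raise RuntimeError("even the minimum batch size does not fit in GPU memory")


def fake_step(batch_size):
    # Stand-in for a real training step: pretend only 16 samples fit.
    if batch_size > 16:
        raise RuntimeError("CUDA out of memory (simulated)")
    return f"trained on {batch_size} samples"


print(run_with_backoff(fake_step, batch_size=128))
# → (16, 'trained on 16 samples')
```

In a real training loop, calling `torch.cuda.empty_cache()` before each retry can help the smaller batch find contiguous memory.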

FAQs:

1. What is PyTorch?

PyTorch is an open-source machine learning library originally developed by Facebook's AI Research lab (now part of Meta) and widely used for deep learning applications. It provides flexibility and speed through its dynamic computation graph and tensor operations.

2. Why should I use a GPU with PyTorch?

Using a GPU can significantly accelerate the training and inference processes in deep learning, allowing you to work with larger models and datasets more efficiently than with a CPU alone.

3. How do I install PyTorch with GPU support?

To install PyTorch with GPU support, visit the official PyTorch website and use the installation selector to choose the appropriate version based on your system configuration, CUDA version, and package manager.

4. How can I check if my GPU is supported by PyTorch?

You can check if your GPU is supported by verifying the compatibility of your NVIDIA GPU with the version of CUDA that your PyTorch installation requires. Refer to the official NVIDIA website for a list of compatible GPUs.

5. What does torch.cuda.empty_cache() do?

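The torch.cuda.empty_cache() function releases the unused memory that PyTorch's caching allocator is holding, so that other GPU applications can use it and tools such as nvidia-smi report it as free. It does not free memory held by live tensors. It may help reduce memory fragmentation in some cases.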

6. Can I use multiple GPUs with PyTorch?

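Yes, PyTorch supports multi-GPU training using data parallelism. torch.nn.DataParallel replicates your model on each GPU and splits every input batch across them. For larger workloads, the PyTorch documentation recommends torch.nn.parallel.DistributedDataParallel instead, even on a single machine.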
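A minimal sketch of the DataParallel approach, using a placeholder linear model and random data, might look like this. The wrapper is only applied when more than one GPU is visible, so the same script also runs on a single GPU or a CPU.

```python
import torch
import torch.nn as nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = nn.Linear(10, 2)

if torch.cuda.device_count() > 1:
    # Replicates the model on every visible GPU and splits each batch along dim 0.
    model = nn.DataParallel(model)
model = model.to(device)

batch = torch.randn(64, 10, device=device)
output = model(batch)  # with DataParallel, results are gathered on the first GPU
print(output.shape, output.device)
```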

7. How do I profile my PyTorch code for performance?

You can use PyTorch’s built-in profiler by importing torch.profiler. This tool provides detailed insights into the performance of your code, allowing you to identify bottlenecks and optimize execution.
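As a starting point, the profiler can be wrapped around a few forward passes. The model and batch below are placeholders, and GPU activity is only recorded when a CUDA device is present.

```python
import torch
import torch.nn as nn
from torch.profiler import ProfilerActivity, profile

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = nn.Sequential(nn.Linear(256, 256), nn.ReLU(), nn.Linear(256, 10)).to(device)
batch = torch.randn(64, 256, device=device)

activities = [ProfilerActivity.CPU]
if device.type == "cuda":
    activities.append(ProfilerActivity.CUDA)  # also record GPU kernels

with profile(activities=activities) as prof:
    with torch.no_grad():
        for _ in range(5):
            model(batch)

# Summarize the five most expensive operators by total CPU time.
report = prof.key_averages().table(sort_by="cpu_time_total", row_limit=5)
print(report)
```

When the run includes CUDA activity, the table gains GPU timing columns, which makes it easier to tell whether time is going to GPU kernels or to CPU-side overhead such as data loading.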

8. What should I do if I encounter a CUDA error?

If you encounter a CUDA error, first check your CUDA installation and ensure your drivers are up to date. You may also want to reduce your batch size or simplify your model to avoid out-of-memory errors.

9. Is it possible to train models without a GPU?

Yes, you can train models using PyTorch on a CPU. However, training will be significantly slower compared to using a GPU, especially for large models and datasets.

10. How can I monitor GPU usage while training?

You can monitor GPU usage in real-time by using the NVIDIA SMI tool in the terminal. Additionally, PyTorch provides functions to check allocated and reserved memory during training.

Closing Remarks:

Checking if PyTorch is using GPU is crucial for optimizing performance in your deep learning projects. By following the steps outlined in this guide, you can easily verify GPU usage, monitor performance, and troubleshoot any issues that arise. Leveraging the power of GPUs can significantly enhance your model training and inference times, allowing for more extensive experimentation and development.
