Graphics Processing Units (GPUs) play a critical role in modern computing, powering everything from gaming to deep learning. With the rise of AI and machine learning applications, GPUs have become even more indispensable for handling intensive computations.

However, one common issue users encounter is the saturation of GPU memory, which can lead to decreased performance and crashes. This article covers practical strategies for freeing up GPU memory, improving system performance, and avoiding out-of-memory failures.

In short: close applications and processes you don’t need that are using the GPU. For deep learning work, you can release cached memory in frameworks like PyTorch with torch.cuda.empty_cache(). Updating drivers and lowering graphical settings also help free up GPU resources.

Understanding GPU Memory:

Before diving into methods to free up GPU memory, it’s important to understand what GPU memory (often referred to as VRAM) is. Much like the system RAM your CPU works from, GPU memory holds the data the GPU needs to access quickly, such as textures, geometry, frame buffers, or a neural network’s weights and activations. The more complex your workload (rendering a 3D scene or training a deep learning model, for example), the more memory it requires.

Why Freeing Up GPU Memory Is Important:

Over time, as GPU workloads increase, memory can fill up with data nobody needs anymore: applications left open in the background, models that were never unloaded, or programs that leak memory. When GPU memory is fully occupied, the driver may spill data into much slower shared system memory, or applications may fail outright with out-of-memory errors. Freeing up GPU memory improves speed, avoids these overflows, and helps prevent crashes, allowing for smoother performance across tasks.

Identifying GPU Memory Usage:

To effectively free up GPU memory, it’s essential to first identify how much memory is being used and by which applications. There are several tools and methods for monitoring GPU memory usage.

- NVIDIA-SMI (NVIDIA System Management Interface): For users with NVIDIA GPUs, the nvidia-smi command-line tool provides detailed information about the current state of the GPU. Running this command shows memory usage, processes that are utilizing the GPU, and available memory. The output allows users to identify processes that may be hogging memory and decide which ones to terminate.

- Task Manager (Windows): Windows users can check GPU memory usage directly in Task Manager. The “Performance” tab, under “GPU,” shows overall dedicated and shared GPU memory. To see which applications are consuming VRAM, open the “Details” tab, right-click a column header, choose “Select columns,” and enable “Dedicated GPU memory.”

- System Monitors (Linux and macOS): On Linux, NVIDIA users can rely on nvidia-smi, while tools such as nvtop (NVIDIA and AMD) and radeontop (AMD) display GPU memory usage in the terminal. On macOS, Activity Monitor’s GPU History window (Window > GPU History) shows GPU activity.
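For scripted monitoring, nvidia-smi has a query mode that prints machine-readable CSV. The sketch below assumes an NVIDIA GPU with nvidia-smi on the PATH; the function names are illustrative, not part of any tool. It keeps the parsing separate from the subprocess call so the parser can be checked without a GPU. Note that --query-compute-apps lists compute (CUDA) processes only; graphics-only programs such as games appear in the plain nvidia-smi table instead.

```python
import subprocess

# Real nvidia-smi query flags; requires an NVIDIA driver and nvidia-smi on PATH.
QUERY = [
    "nvidia-smi",
    "--query-compute-apps=pid,process_name,used_memory",
    "--format=csv,noheader,nounits",
]


def parse_compute_apps(csv_text):
    """Parse 'pid, process_name, used_memory' rows into dicts, largest user first."""
    apps = []
    for line in csv_text.strip().splitlines():
        pid, rest = line.split(",", 1)
        name, used = rest.rsplit(",", 1)  # tolerate commas inside process names
        used = used.strip()
        apps.append({
            "pid": int(pid),
            "name": name.strip(),
            # Some platforms report "[N/A]" instead of a number of MiB.
            "used_mib": int(used) if used.isdigit() else None,
        })
    return sorted(apps, key=lambda app: app["used_mib"] or 0, reverse=True)


def gpu_processes():
    """Run nvidia-smi and return the compute processes it reports."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_compute_apps(result.stdout)
```

On a machine with the NVIDIA driver installed, gpu_processes() returns one dict per process, with the heaviest VRAM consumers first.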

Methods to Free Up GPU Memory:

Once you have identified the memory usage, there are various methods you can use to free up GPU memory. Below are effective strategies tailored to both casual users and professionals working in fields like gaming, deep learning, and 3D modeling.

1. Close Unnecessary Applications

One of the simplest ways to free up GPU memory is by closing any background applications that are utilizing the GPU. Web browsers, video editors, or even certain productivity software can consume VRAM without you realizing it.

2. Terminate Specific GPU Processes

Using nvidia-smi, you can find the process IDs (PIDs) of programs holding excessive GPU memory and then terminate them with your operating system’s tools, for example kill <PID> on Linux or taskkill /PID <PID> on Windows. If you’re running multiple instances of a machine learning model, stopping the instances that are no longer in use frees their memory, because the driver reclaims a process’s GPU memory when it exits.

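As a heavier-handed option, an idle GPU can be reset entirely (shown here for Linux):

sudo nvidia-smi --gpu-reset -i 0

This resets GPU 0 without restarting the system. It requires root privileges, fails if any process (including an X server or another nvidia-smi instance) is still using the GPU, and is not supported on every GPU model, so treat it as a recovery step rather than a routine way to reclaim memory.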
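The process-termination step described above can be scripted. This is a minimal sketch, assuming nvidia-smi is on the PATH and that sending SIGTERM (a polite request to exit) is acceptable for the processes involved; the helper names and the threshold are hypothetical. It defaults to a dry run so nothing is stopped by accident.

```python
import os
import signal
import subprocess


def pids_over(csv_text, threshold_mib):
    """Return PIDs whose used_memory (MiB) exceeds threshold_mib.

    Expects rows from:
      nvidia-smi --query-compute-apps=pid,used_memory --format=csv,noheader,nounits
    """
    pids = []
    for line in csv_text.strip().splitlines():
        pid, used = (field.strip() for field in line.split(","))
        if used.isdigit() and int(used) > threshold_mib:  # skip "[N/A]" rows
            pids.append(int(pid))
    return pids


def stop_heavy_gpu_processes(threshold_mib, dry_run=True):
    """Send SIGTERM to every GPU compute process above threshold_mib.

    With dry_run=True (the default) it only reports what would be stopped.
    On Windows, `taskkill /PID <pid>` is the usual manual equivalent.
    """
    out = subprocess.run(
        ["nvidia-smi", "--query-compute-apps=pid,used_memory",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    targets = pids_over(out, threshold_mib)
    for pid in targets:
        if dry_run:
            print(f"would stop PID {pid}")
        else:
            os.kill(pid, signal.SIGTERM)  # lets the process clean up before exiting
    return targets
```

SIGTERM is chosen over SIGKILL so training scripts get a chance to save checkpoints; escalate manually only if a process ignores it.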

3. Clear GPU Memory in Programming Environments

For developers working with machine learning libraries like TensorFlow or PyTorch, GPU memory can fill up quickly as models are trained. To release memory between experiments, or to stop a framework from claiming more memory than it needs, most frameworks provide built-in controls.

For example, in PyTorch, you can release unused memory with:

torch.cuda.empty_cache()

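This releases memory that PyTorch’s caching allocator is holding but no tensor is using, so other processes can use it. It does not free memory still referenced by live tensors, so delete those references (for example, del model) first.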
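A minimal sketch of that order of operations, assuming PyTorch may or may not be installed; free_cuda_cache is a hypothetical helper name. It runs Python’s garbage collector before emptying the cache, since tensors caught in reference cycles are only released once the collector runs.

```python
import gc

try:
    import torch
except ImportError:  # PyTorch not installed: the helper becomes a no-op
    torch = None


def free_cuda_cache():
    """Collect garbage, then return PyTorch's cached-but-unused CUDA blocks.

    Returns (allocated_bytes, reserved_bytes) after cleanup, or None when
    PyTorch or a CUDA device is not available.
    """
    if torch is None or not torch.cuda.is_available():
        return None
    gc.collect()              # drop unreachable objects that may still hold tensors
    torch.cuda.empty_cache()  # release cached blocks that no tensor is using
    return torch.cuda.memory_allocated(), torch.cuda.memory_reserved()
```

Typical use between experiments is to drop your own references first (for example, del model, optimizer) and then call free_cuda_cache(); memory_allocated() counts bytes held by live tensors, while memory_reserved() also includes whatever the allocator still caches.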

In TensorFlow, users can enable dynamic memory growth, which ensures that the framework only uses as much GPU memory as it needs:

import tensorflow as tf

gpu_options = tf.compat.v1.GPUOptions(allow_growth=True)

config = tf.compat.v1.ConfigProto(gpu_options=gpu_options)

sess = tf.compat.v1.Session(config=config)

This TensorFlow 1.x-style configuration applies to sessions created with config. It prevents TensorFlow from reserving all GPU memory upfront, leaving room for other applications.
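In TensorFlow 2.x, where sessions are no longer the normal entry point, the same behavior is enabled per device through tf.config. This is a sketch assuming TensorFlow 2.x; enable_memory_growth is a hypothetical helper name, and it does nothing if TensorFlow is not installed.

```python
try:
    import tensorflow as tf
except ImportError:  # TensorFlow not installed: the helper does nothing
    tf = None


def enable_memory_growth():
    """Let TensorFlow allocate GPU memory on demand instead of reserving it all.

    Must run before any operation touches the GPU; once the devices are
    initialized, set_memory_growth raises RuntimeError.
    Returns the number of GPUs configured (0 without TensorFlow or a GPU).
    """
    if tf is None:
        return 0
    gpus = tf.config.list_physical_devices("GPU")
    for gpu in gpus:
        tf.config.experimental.set_memory_growth(gpu, True)
    return len(gpus)
```

Call it at the very top of a script, before building any model, so the setting is applied before TensorFlow initializes the GPUs.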

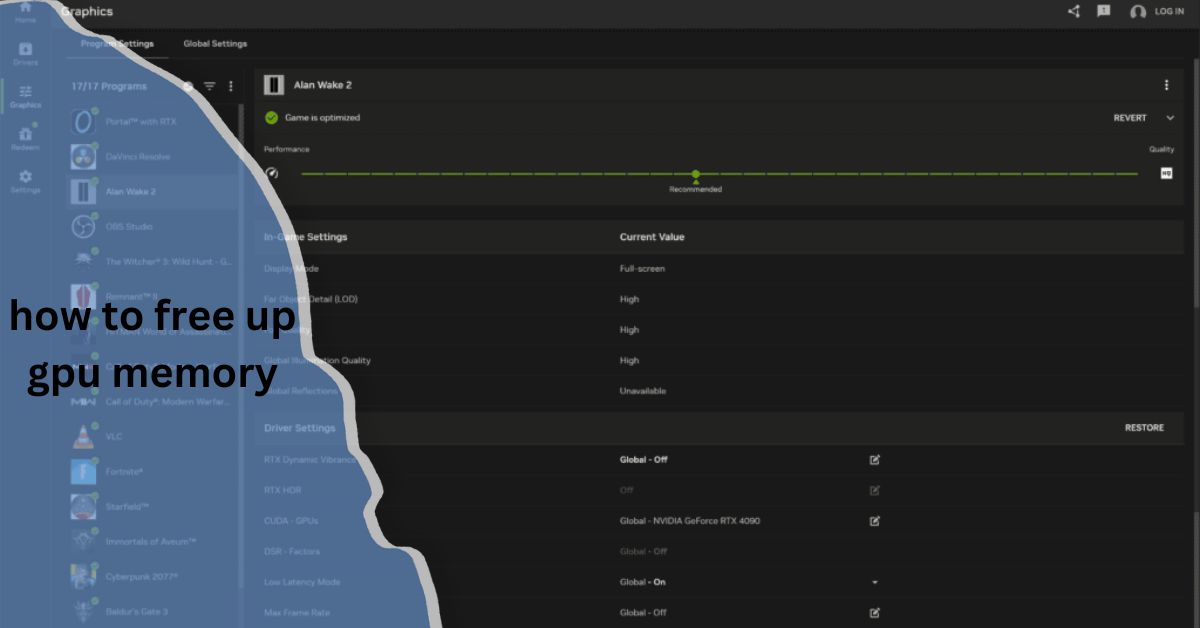

4. Reduce Texture and Model Resolutions

In gaming or 3D modeling, reducing the resolution of textures, models, or other assets can significantly decrease the amount of VRAM required. Most modern software provides options to adjust graphical settings, allowing you to lower the load on the GPU. This not only improves performance but also prevents VRAM overload.
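A quick calculation shows why texture resolution matters so much: halving both dimensions cuts a texture’s footprint to a quarter. The sketch below assumes uncompressed RGBA8 textures (4 bytes per texel) with an optional full mipmap chain; real games usually use block compression such as BC1 (half a byte per texel) or BC7 (one byte per texel), so absolute numbers will be lower, but the scaling is the same.

```python
def texture_bytes(width, height, bytes_per_texel=4, mipmaps=True):
    """Approximate VRAM for one uncompressed texture, optionally with a full mip chain."""
    total = 0
    while True:
        total += width * height * bytes_per_texel
        if not mipmaps or (width == 1 and height == 1):
            return total
        # Each mip level halves both dimensions, never going below 1 texel.
        width, height = max(1, width // 2), max(1, height // 2)


MIB = 1024 * 1024
for side in (4096, 2048, 1024):
    print(f"{side}x{side}: {texture_bytes(side, side) / MIB:.1f} MiB with mipmaps")
```

This prints about 85.3, 21.3, and 5.3 MiB respectively, so dropping a single 4K texture to 2K saves roughly 64 MiB; across hundreds of textures, that adds up to gigabytes.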

5. Manage GPU Memory with Docker

If you’re running containerized applications, such as those in Docker, you can control which GPUs each container can see, so that no single container monopolizes your hardware:

docker run --gpus '"device=0"' <image-name>

This exposes only GPU 0 to the container (the --gpus flag requires the NVIDIA Container Toolkit). Note that Docker’s --memory flag limits host RAM, not GPU memory, and Docker has no built-in option to cap VRAM per container. To limit how much GPU memory a container uses, configure the framework running inside it, for example with TensorFlow’s memory growth setting described above.
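Because Docker itself cannot cap VRAM, one option for PyTorch workloads is a per-process limit set inside the container. This sketch uses torch.cuda.set_per_process_memory_fraction and reads the fraction from an environment variable; GPU_MEM_FRACTION and both helper names are hypothetical choices for illustration, not a Docker or PyTorch convention.

```python
import os

try:
    import torch
except ImportError:  # PyTorch not installed: apply_memory_cap becomes a no-op
    torch = None


def memory_fraction_from_env(env=None, default=1.0):
    """Read a (0, 1] GPU memory fraction from the hypothetical GPU_MEM_FRACTION variable."""
    env = os.environ if env is None else env
    raw = env.get("GPU_MEM_FRACTION")
    if raw is None:
        return default
    value = float(raw)  # raises ValueError for non-numeric input
    if not 0.0 < value <= 1.0:
        raise ValueError(f"GPU_MEM_FRACTION must be in (0, 1], got {value}")
    return value


def apply_memory_cap(device=0):
    """Cap PyTorch's allocations on `device`; returns the fraction used, or None."""
    if torch is None or not torch.cuda.is_available():
        return None
    fraction = memory_fraction_from_env()
    # Allocations beyond this share of the device raise an out-of-memory error.
    torch.cuda.set_per_process_memory_fraction(fraction, device)
    return fraction
```

The container would then be started with something like docker run --gpus '"device=0"' -e GPU_MEM_FRACTION=0.5 <image-name>, and the application calls apply_memory_cap() before allocating. The cap only governs PyTorch’s own allocator; other libraries in the same process are not limited by it.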

6. Update GPU Drivers

Outdated drivers can sometimes cause inefficient memory usage or lead to memory leaks. Regularly updating your GPU drivers can prevent such issues. Both NVIDIA and AMD frequently release driver updates that include performance improvements and optimizations for better memory management.

7. Disable Background GPU Processes

Many systems run background processes that may use GPU memory without your knowledge. For example, hardware acceleration in browsers or streaming services can consume VRAM. Disabling hardware acceleration in non-critical applications can free up significant GPU resources.

For example, in Chrome, you can disable hardware acceleration by navigating to:

Settings > System > Use hardware acceleration when available > Toggle Off (then relaunch Chrome for the change to take effect)

8. Optimize CUDA Memory in Deep Learning Models

For deep learning professionals, controlling CUDA memory allocation is essential for running models efficiently on the GPU. In CUDA C/C++, cudaMemGetInfo() reports the free and total memory on the current device, and PyTorch exposes the same query as torch.cuda.mem_get_info(). Checking free memory before launching a large job, and releasing allocations you no longer need (cudaFree() in CUDA code, or deleting tensors in a framework), helps keep heavy computations from running out of memory.
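As an illustration of acting on that free-memory figure, the sketch below sizes a batch to fit. It assumes you already have an estimate of memory per sample (for example, from profiling one training step); bytes_per_sample, the 10% headroom, and the helper names are all hypothetical.

```python
try:
    import torch
except ImportError:  # PyTorch not installed: suggest_batch_size returns None
    torch = None


def max_batch_size(free_bytes, bytes_per_sample, headroom=0.1):
    """Largest batch that fits in free_bytes while keeping `headroom` (a fraction) spare."""
    usable = free_bytes * (1.0 - headroom)
    return max(0, int(usable // bytes_per_sample))


def suggest_batch_size(bytes_per_sample, device=0, headroom=0.1):
    """Query free VRAM via torch.cuda.mem_get_info (backed by cudaMemGetInfo)."""
    if torch is None or not torch.cuda.is_available():
        return None
    free_bytes, _total_bytes = torch.cuda.mem_get_info(device)
    return max_batch_size(free_bytes, bytes_per_sample, headroom)
```

For example, with 8 GiB free, about 100 MiB per sample, and 25% headroom, max_batch_size returns 61. Memory that PyTorch’s allocator has cached counts as used in mem_get_info, so calling torch.cuda.empty_cache() first gives a more generous figure.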

Preventative Measures for Efficient GPU Memory Management:

Freeing up GPU memory is not always a reactive process. By following best practices, users can ensure their GPU operates efficiently without running into memory constraints.

1. Use Memory Profiling Tools

Memory profiling tools help monitor GPU memory consumption in real-time and over extended periods. Tools like Nsight Systems (for NVIDIA GPUs) or AMD’s Radeon GPU Profiler provide detailed breakdowns of memory usage, helping you identify memory-intensive applications and optimize them accordingly.
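At the framework level, PyTorch keeps its own allocation statistics, which can complement system-wide tools like Nsight Systems when you want the peak memory of one specific step. A minimal sketch, with peak_cuda_memory as a hypothetical helper name; it only sees memory allocated through PyTorch, not other libraries.

```python
try:
    import torch
except ImportError:  # PyTorch not installed: the function still runs fn
    torch = None


def peak_cuda_memory(fn, *args, **kwargs):
    """Run fn and report the peak PyTorch CUDA allocation during the call.

    Returns (result, peak_bytes); peak_bytes is None when CUDA is unavailable.
    """
    if torch is None or not torch.cuda.is_available():
        return fn(*args, **kwargs), None
    torch.cuda.synchronize()              # finish earlier work before resetting stats
    torch.cuda.reset_peak_memory_stats()
    result = fn(*args, **kwargs)
    torch.cuda.synchronize()              # wait for queued kernels launched by fn
    return result, torch.cuda.max_memory_allocated()
```

Wrapping a single forward and backward pass this way shows how close a given batch size comes to the device limit.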

2. Optimize Application Settings

Many professional applications, especially in fields like 3D rendering or gaming, allow users to control how much memory is allocated for specific tasks. Tweaking these settings to fit your needs can prevent memory overuse.

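3. Regularly Restart Applications or Your System

Over time, minor memory leaks can accumulate, leading to reduced available GPU memory. Because the driver reclaims a process’s GPU memory when that process exits, restarting the leaking application, or rebooting, restores the memory. On Linux systems running GNOME, restarting the display manager ends the graphical session and releases the memory it held (save your work first, because this logs you out):

sudo service gdm restart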

For Windows users, a system reboot may be the most efficient way to restore GPU memory.

Common Challenges in Freeing Up GPU Memory:

Even with all these techniques, users can face challenges in freeing up GPU memory. Some applications may not release memory immediately after being closed, or you may find that multiple programs are demanding GPU resources simultaneously. In such cases, it’s important to prioritize tasks and allocate GPU memory based on the most critical applications.

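Another common issue is memory fragmentation, where memory is freed, but in small, non-contiguous blocks. In this case, even though the system reports free memory, a large allocation can still fail. There is no general-purpose tool that defragments GPU memory; fragmentation is managed by each application’s allocator. Restarting the affected process clears it, and some frameworks expose allocator settings that reduce it, such as the max_split_size_mb option in PyTorch’s PYTORCH_CUDA_ALLOC_CONF environment variable.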
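If PyTorch is the application fragmenting memory, its caching allocator can be tuned through PYTORCH_CUDA_ALLOC_CONF. This sketch sets max_split_size_mb, which stops the allocator from splitting blocks larger than the given size; the value 128 is illustrative only, and the right setting depends on your workload. The variable must be set before PyTorch initializes CUDA, so the safest place is before import torch.

```python
import os

# Must be set before PyTorch initializes CUDA (safest: before `import torch`).
# setdefault keeps any value already provided by the environment.
os.environ.setdefault("PYTORCH_CUDA_ALLOC_CONF", "max_split_size_mb:128")

try:
    import torch
except ImportError:  # PyTorch not installed: nothing else to do
    torch = None

if torch is not None and torch.cuda.is_available():
    # Allocator statistics, useful for comparing runs with and without the setting.
    print(torch.cuda.memory_summary(abbreviated=True))
```

The same setting can be applied without code changes by exporting PYTORCH_CUDA_ALLOC_CONF in the shell before launching the script.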

FAQs:

1. How can I check how much GPU memory is being used?

You can monitor GPU memory usage through tools like NVIDIA’s nvidia-smi or Task Manager on Windows. These provide detailed insights into how much VRAM is being utilized by different applications.

2. What causes GPU memory to fill up?

GPU memory can fill up due to resource-heavy applications such as gaming, deep learning tasks, video rendering, or even multiple browser tabs running hardware acceleration.

3. What is VRAM, and how is it different from system RAM?

VRAM (Video RAM) is the memory used by the GPU to store graphical data, whereas system RAM is used by the CPU for general tasks. Both serve similar purposes but for different processing units.

4. Can updating GPU drivers free up memory?

Updating GPU drivers can improve memory management and fix bugs that cause memory leaks or inefficient usage, which can reduce how much memory is wasted.

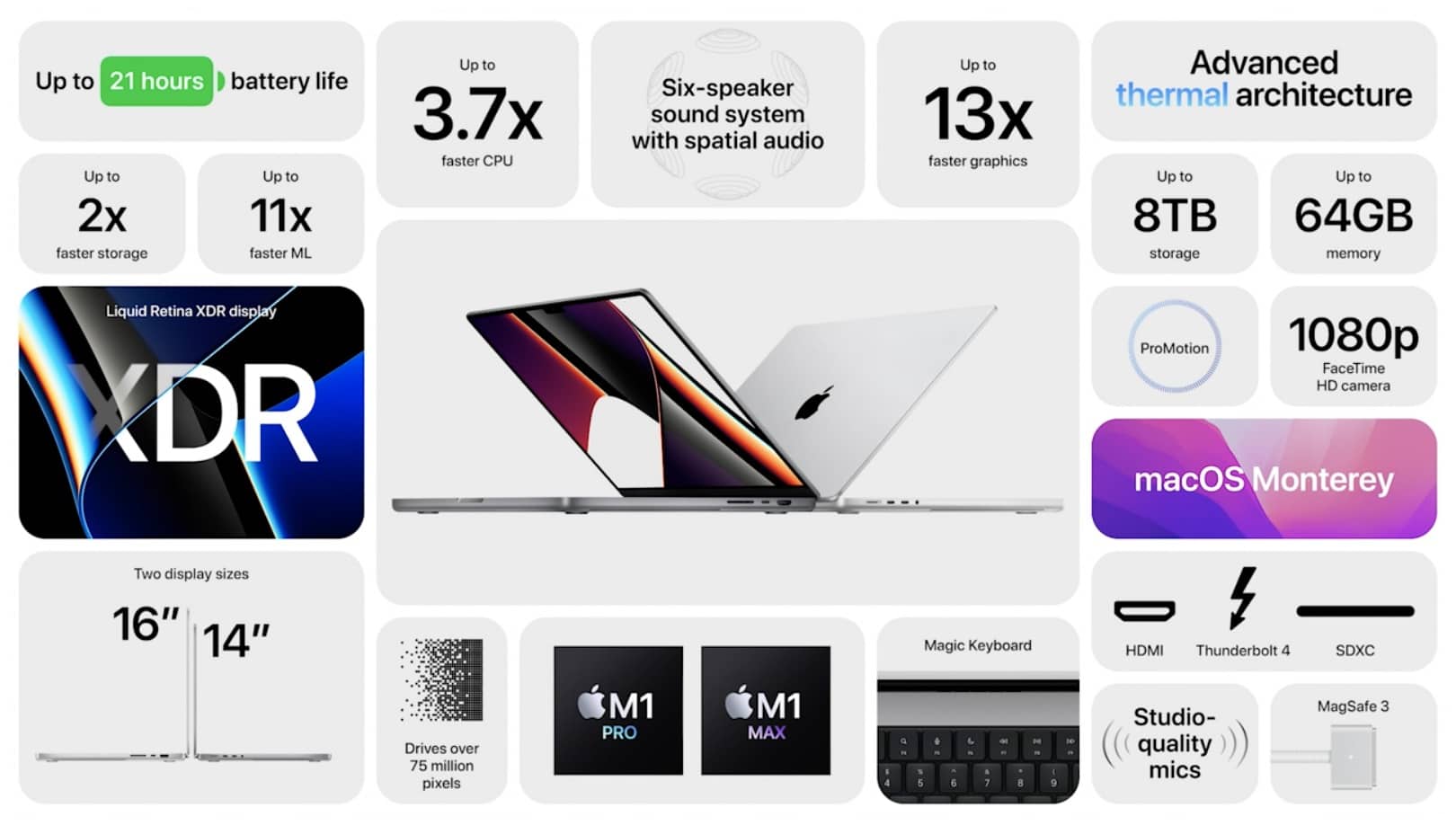

5. How much GPU memory do I need for gaming?

For modern games, a GPU with at least 6-8GB of VRAM is recommended. However, this depends on the game’s graphical settings and resolution.

6. Can I manually free GPU memory in programming environments?

Yes. In PyTorch, torch.cuda.empty_cache() returns cached, unused memory once you have deleted the tensors that held it. In TensorFlow, enabling dynamic memory growth doesn’t free memory, but it stops TensorFlow from reserving all of it upfront.

7. Does lowering graphical settings free up GPU memory?

Yes, reducing the resolution of textures, models, and overall graphics quality can free up a significant amount of GPU memory.

8. Can I free GPU memory without restarting my computer?

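Yes. Terminating unnecessary processes or clearing framework memory caches frees GPU memory without a full system restart. On supported GPUs, nvidia-smi --gpu-reset can also reset an idle GPU, although it requires root privileges and fails while any process is still using the device.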

9. What happens if GPU memory runs out?

If GPU memory runs out, applications may fail with out-of-memory errors or crash, and games may stutter as data spills into slower shared system memory. Preventing memory overload is key to ensuring smooth operation.

Closing Remarks:

Freeing up GPU memory is essential for maximizing performance, especially in resource-intensive tasks such as gaming, deep learning, and 3D modeling. By understanding how GPU memory works, monitoring your system’s usage, and implementing the strategies outlined in this article, you can ensure that your GPU runs efficiently, offering optimal performance without the risk of crashes or slowdowns.